Test-Driven Configuration?

Test-Driven Development (TDD) is the software development practice of defining business requirements as small, repeatable tests written in code. First a test is written, followed by the code that satisfies it. Every time a new feature is developed, the entire suite of existing tests is run, and the new code is not considered complete until all tests pass. Following this process gives us greater assurance that new code has not broken existing features.

Software development is an important IT function at Bates, but we are often tasked with supporting third-party systems, ranging from open-source and proprietary software to Software as a Service (SaaS) offerings. Fortunately, TDD can be just as applicable to system configuration as it is to software development!

The challenges of configuring a complex system

At Bates, we host the open-source Learning Management System (LMS) Moodle. It supports a number of course-related functions for faculty and students, including document distribution, forums, quizzes, and electronic assignment submission.

Moodle is a highly configurable system; at last count, it had over 2,800 configurable settings. That number doesn’t even include the more than 600 capabilities that can be assigned to any number of roles to govern what users are allowed to do. The number of possible configuration combinations is astronomical.

To add to this complexity, implementing a business requirement often means modifying several configuration settings. For example, disabling Moodle’s Messaging system (user chat) requires two configuration changes on two separate screens. Unless this is documented somewhere outside the system, you would likely not know that the two changes relate to a single requirement. If you later want to re-enable the feature, you may also fail to notice that both settings must be changed.
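One way to keep related settings together is to express the whole requirement as a single check. The following is a minimal Python sketch of that idea, not Moodle code (Moodle plugins are written in PHP): the site configuration is modelled as a plain dictionary, and the setting names `messaging_setting_a` and `messaging_setting_b` are hypothetical placeholders for the two settings involved, not verified Moodle names.

```python
from typing import Dict, List

# Hypothetical placeholders for the two settings, on two different admin
# screens, that together disable messaging. Not verified Moodle setting names.
MESSAGING_OFF = {
    "messaging_setting_a": "0",
    "messaging_setting_b": "0",
}


def check_messaging_disabled(config: Dict[str, str]) -> List[str]:
    """Return one problem per setting that is not in its required state.

    An empty list means the whole requirement holds.
    """
    problems = []
    for name, expected in MESSAGING_OFF.items():
        actual = config.get(name)  # None if the setting is missing entirely
        if actual != expected:
            problems.append(f"{name} is {actual!r}, expected {expected!r}")
    return problems


# Only one of the two settings was changed, so the requirement still fails.
site_config = {"messaging_setting_a": "0", "messaging_setting_b": "1"}
print(check_messaging_disabled(site_config))
```

Because both settings live in one requirement, anyone who later re-enables messaging has to edit this check, which surfaces the second setting instead of leaving it forgotten.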

As business requirements accumulate, so do the number of configuration changes and the likelihood that a prior change will be undone. How can you be sure that your most recent change didn’t invalidate a past requirement? If you look at a setting several years from now, will you know why it is set the way it is?

Writing tests keeps complexity under control

We encountered all of these problems while reimplementing Moodle this year. The business reasons behind configuration changes made over the lifetime of the application were not well documented. Since we were starting fresh, we wanted to document our decisions and be able to quickly check that the resulting changes were in place.

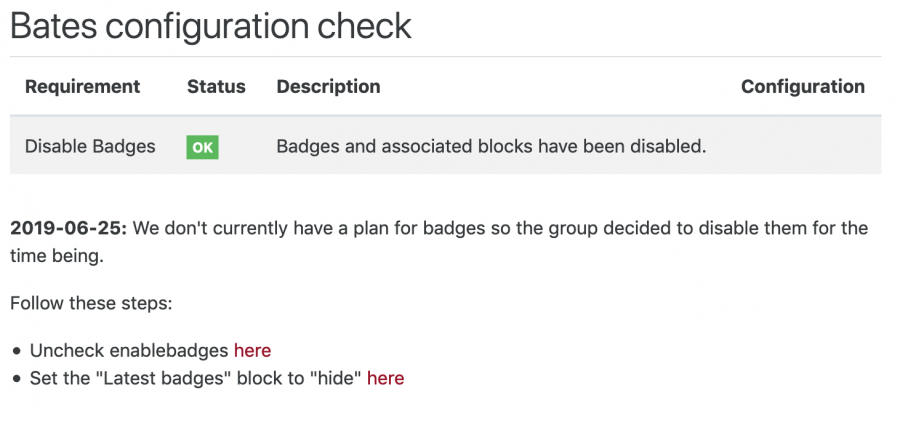

Taking inspiration from TDD we set out to define our business requirements as tests. Fortunately, Moodle is highly extendable through its plugin architecture. We repurposed one of its delivered reporting plugins and developed it into our testing harness. Here is an example of a test (referred to below as a “requirement”) for verifying the “Badges” feature is disabled:

Each test has a name, a status indicating whether the required configuration is in place, and a short description of the current state. If a configuration option is not set as expected, it is listed here along with a link to the page within the system where it can be adjusted. Below these values are the date the requirement was defined and the reason for it (often with the names of those who defined it). Specific instructions for applying the changes are also included.
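The fields listed above map naturally onto a small record type. This is a hypothetical Python sketch of such a record, not the structure used in our Moodle plugin: the field names are illustrative, `enablebadges` is assumed to be the name of Moodle’s badges switch, the admin page in `settings_url` is an assumption, and its host name is a placeholder.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Dict, List, Optional

Config = Dict[str, str]


@dataclass
class Requirement:
    """A configuration requirement with the fields shown in the report.

    Field names are illustrative, not taken from Moodle or our plugin.
    """
    name: str
    defined_on: date
    reason: str          # why the requirement exists (and who defined it)
    instructions: str    # how to apply the configuration
    settings_url: str    # admin page where the setting can be adjusted
    check: Callable[[Config], List[str]]  # returns problems; empty means pass

    def evaluate(self, config: Config) -> Dict[str, Optional[str]]:
        """Produce the status and description shown for one report entry."""
        problems = self.check(config)
        if not problems:
            return {"name": self.name, "status": "OK",
                    "description": "Configured as expected", "link": None}
        return {"name": self.name, "status": "FAIL",
                "description": "; ".join(problems), "link": self.settings_url}


def badges_check(config: Config) -> List[str]:
    # Assumption: "enablebadges" is the setting that turns badges on or off.
    value = config.get("enablebadges")
    return [] if value == "0" else [f"enablebadges is {value!r}, expected '0'"]


badges_disabled = Requirement(
    name="Badges feature is disabled",
    defined_on=date.today(),  # placeholder date
    reason="Placeholder: the reason and the people who defined it",
    instructions="Placeholder: steps for turning the feature off",
    settings_url="https://moodle.example.edu/admin/settings.php?section=optionalsubsystems",
    check=badges_check,
)

print(badges_disabled.evaluate({"enablebadges": "1"}))
```

Keeping the reason and instructions next to the check means the documentation travels with the test rather than living in a separate document.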

These tests are small blocks of code that validate configuration state using Moodle’s programming API. The first few tests took some time to get working, but patterns quickly emerged that let us write new tests much faster.
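As an illustration of the kind of pattern that can emerge, here is a Python sketch of two reusable helpers: one builds a check from a table of expected setting values, and the other combines checks so a single requirement can span several settings. The helper names `expect_settings` and `all_of` are hypothetical, as are the setting names; this is not the code from our plugin or Moodle’s API.

```python
from typing import Callable, Dict, List

Config = Dict[str, str]
Check = Callable[[Config], List[str]]


def expect_settings(expected: Dict[str, str]) -> Check:
    """Build a check that every named setting has its expected value."""
    def check(config: Config) -> List[str]:
        return [
            f"{name} is {config.get(name)!r}, expected {value!r}"
            for name, value in expected.items()
            if config.get(name) != value
        ]
    return check


def all_of(*checks: Check) -> Check:
    """Combine checks so that one requirement can span several settings."""
    def combined(config: Config) -> List[str]:
        problems: List[str] = []
        for check in checks:
            problems.extend(check(config))
        return problems
    return combined


# Setting names are hypothetical examples, not verified Moodle names.
badges_off = expect_settings({"enablebadges": "0"})
messaging_off = expect_settings({"messaging": "0"})
optional_features_off = all_of(badges_off, messaging_off)

print(optional_features_off({"enablebadges": "0"}))
```

Once helpers like these exist, most new requirements reduce to a one-line declaration of expected values.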

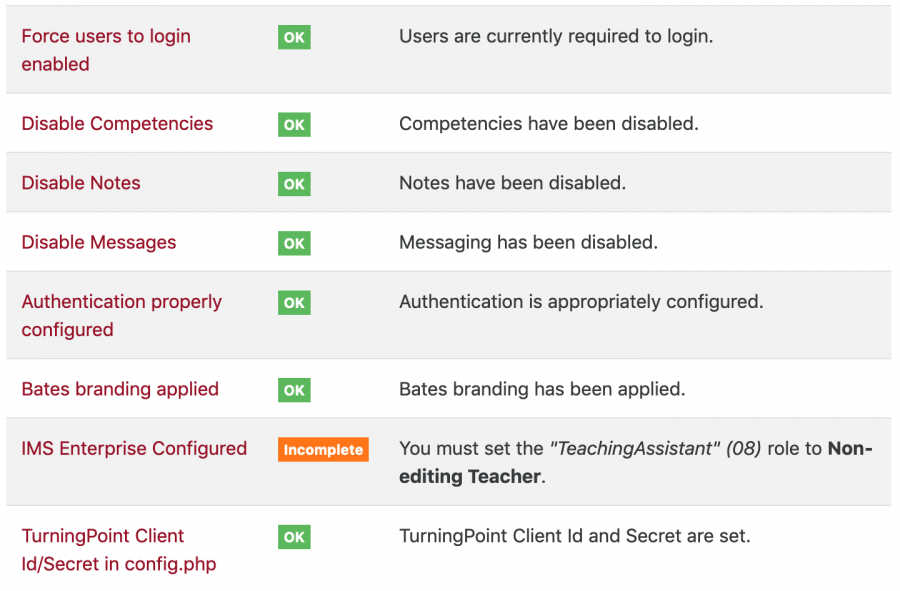

The main page of our configuration test report lists the status of requirements we’ve defined to and their status. We have at least 30 defined currently but the screenshot below shows a smaller snippet:

Now we can instantly verify whether all of our defined configurations are in place. Whenever we define a new requirement and apply its configuration, we can verify that all existing configurations still hold.
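Verifying that every configuration still holds amounts to running the whole suite and summarizing the results. Here is a minimal Python sketch of such a runner; the requirement names, placeholder reasons, and setting names are hypothetical, and printing the reason next to a failure is our illustration of how a report might help decide whether a change was a mistake.

```python
from typing import Callable, Dict, List, NamedTuple

Config = Dict[str, str]


class Requirement(NamedTuple):
    name: str
    reason: str
    check: Callable[[Config], List[str]]  # returns problems; empty means pass


def run_suite(requirements: List[Requirement], config: Config) -> List[str]:
    """Evaluate every requirement, print a report, and return failed names."""
    failed: List[str] = []
    for req in requirements:
        problems = req.check(config)
        print(f"[{'FAIL' if problems else 'OK'}] {req.name}")
        for problem in problems:
            print(f"    {problem}")
        if problems:
            # Showing the reason helps decide: mistake, or changed requirement?
            print(f"    Why this exists: {req.reason}")
            failed.append(req.name)
    return failed


def setting_is(name: str, value: str) -> Callable[[Config], List[str]]:
    """Check that a single setting has the given value."""
    def check(config: Config) -> List[str]:
        actual = config.get(name)
        return [] if actual == value else [f"{name} is {actual!r}, expected {value!r}"]
    return check


# Hypothetical requirements and setting names, for illustration only.
suite = [
    Requirement("Badges feature is disabled", "Placeholder reason", setting_is("enablebadges", "0")),
    Requirement("Messaging is disabled", "Placeholder reason", setting_is("messaging", "0")),
]

failed = run_suite(suite, {"enablebadges": "0", "messaging": "1"})
print(f"{len(failed)} of {len(suite)} requirements failed")
```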

What if a test fails? We can look back at the requirement to see why we applied the configuration changes in the first place and which configuration option (or options) is at fault. From there, we can determine whether the change was a mistake or our requirements have changed. If the requirements have changed, the test can be modified to reflect the new desired state.

These tests are especially powerful for verifying security configuration. For example, we define an “Auditor” role that is essentially the same as the “Student” role but with a few small modifications. Over time, we often decide that the Student role should include or exclude some capability based on a problem raised during daily use. It’s easy to forget that the same change should be applied to the Auditor role as well. We’ve written tests that verify the specific differences between the two roles, and those tests fail if any unexpected differences are found.
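The role comparison can be reduced to set arithmetic: the derived role should equal the base role plus its documented additions, minus its documented removals. This Python sketch assumes each role can be flattened to a set of allowed capability names. Real Moodle roles also carry permission levels (allow, prevent, prohibit), which this ignores, and the capability sets shown are invented for illustration, although the names follow Moodle’s naming style.

```python
from typing import Set, Tuple


def role_drift(
    base: Set[str],
    derived: Set[str],
    expected_added: Set[str],
    expected_removed: Set[str],
) -> Tuple[Set[str], Set[str]]:
    """Compare a derived role (e.g. Auditor) with its base role (e.g. Student).

    The derived role should equal the base role plus ``expected_added`` minus
    ``expected_removed``. Returns (unexpected_extra, unexpectedly_missing);
    two empty sets mean the roles differ only in the documented ways.
    """
    expected = (base | expected_added) - expected_removed
    return derived - expected, expected - derived


# Invented capability sets, flattened to "allowed or not".
student = {"mod/forum:replypost", "mod/assign:submit", "mod/quiz:attempt"}
auditor = {"mod/forum:replypost"}
auditor_removes = {"mod/assign:submit", "mod/quiz:attempt"}

print(role_drift(student, auditor, set(), auditor_removes))

# Later, Student gains a capability but nobody updates Auditor.
student.add("mod/forum:startdiscussion")
print(role_drift(student, auditor, set(), auditor_removes))
```

The second call reports `mod/forum:startdiscussion` as unexpectedly missing from Auditor, which is exactly the kind of forgotten change the test is meant to catch.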

Conclusion

Configuration tests are a powerful form of documentation that can provide instant validation of expected state. For a small investment of time upfront they allow us to make changes with greater confidence.

As more software is delivered as Software as a Service, the importance of configuration management will only grow. When evaluating systems, it’s important to check that configuration state can be assessed programmatically through a well-documented API or plugin architecture.

A testing framework need not be sophisticated. As our Moodle example shows, it’s possible to build a reasonable system without a complicated TDD framework. What’s important is that requirements are defined somewhere and are easy to validate.