The college’s new high-performance computing setup is not visually dazzling, at least compared with the huge computer we remember from Batman’s Batcave, circa 1968. It occupies barely half of a 6-foot-tall computer rack.

But then again, the computing setup, known as a “cluster” because it links 12 separate computers, doesn’t need to fill a whole cave. And perhaps most important, it’s a shared resource — not reserved for one or two superheroes.

While many colleges have high-performance computing clusters, it’s not uncommon for them to be appropriated by a few faculty members (superheroes, as it were).

Bates’ HPCC, however, is designed as a “community-based resource as opposed to one that would just benefit specific faculty members,” explains Andrew White, director of academic and client services for Information and Library Services. “Ours is for anyone who has the need to examine data in a deep way.”

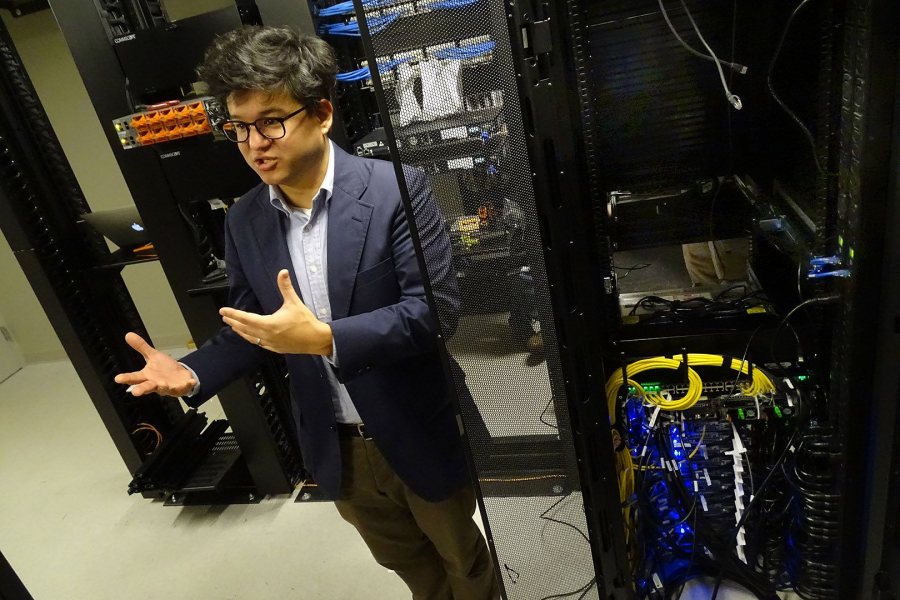

Jeffrey Oishi, the college’s new computational astrophysicist, visits the HPCC where it lives: in a ground-floor hub room in Kalperis Hall at 65 Campus Ave. (Jay Burns/Bates College)

One of those people is Jeff Oishi, the college’s new computational astrophysicist. He’ll be a power user, and some of his research funds helped to purchase the HPCC, which sits in a 15-by-17-foot hub room in the basement of Kalperis Hall, one of the college’s two new residences on Campus Avenue.

Oishi calls the HPCC “the once and future” of computing. “Once” because sharing was once the way to access powerful computing, and “future” because it anticipates how Bates will use and expand the HPCC.

When it comes to an HPCC, “high performance” has the same meaning as it does for a 1971 Plymouth Hemi Cuda muscle car. It means extraordinary power.

For Oishi and his student researchers, this computing power will run models that explain how gases flow inside the atmospheres of giant planets like Jupiter. In one project, they will use an approximation known as linearization to model how gases go from stable to unstable. In a second project, Oishi’s team will do 3D simulations of these transitions.
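As a rough illustration of what linearization means (a generic textbook sketch, not the actual equations Oishi’s group solves): take a quantity $u$ that evolves according to some rule $f$, sitting at a steady state $u_0$, and nudge it by a small amount $\delta u$. The symbols $u$, $f$, $u_0$, and $\delta u$ are introduced here for illustration only.

$$
\frac{du}{dt} = f(u), \qquad f(u_0) = 0, \qquad u(t) = u_0 + \delta u(t)
$$

Keeping only the terms that are first order in the small nudge gives a much simpler, linear equation:

$$
\frac{d\,\delta u}{dt} \approx f'(u_0)\,\delta u
\quad\Longrightarrow\quad
\delta u(t) = \delta u(0)\, e^{f'(u_0)\,t}
$$

If $f'(u_0) < 0$, the nudge dies away and the state is stable; if $f'(u_0) > 0$, it grows and the state is unstable. The point where that sign flips marks the transition from stable to unstable.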

When a big job comes to the HPCC from Oishi’s lab in Carnegie Science, the job goes first to one of the 12 computers, each of which is known as a “node.” The receiving node, called the “head node,” is the traffic cop that manages requests and draws on the other 11 nodes’ computing power as needed.

“The head node splits up the job, with pieces going to everybody else,” explains Jim Bauer, director of network and infrastructure services for ILS. “The nodes all communicate with each other, assemble the results, and send it back to the head node.”

As the job gets crunched and all the processors inside all the nodes swing into action, each processor is “talking with every other processor all the time,” Oishi says. It’s an overlapping conversation, like a Robert Altman movie. In computer parlance, this gabfest is called “all-to-all” communication and it’s a hallmark of high-performance computing.
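The split-and-reassemble pattern Bauer describes can be sketched in a few lines of Python. This is a toy, not the software Bates runs: threads on a single machine stand in for the 11 worker nodes, the sum-of-squares task is made up, and every function name here is hypothetical. Real clusters generally rely on dedicated message-passing libraries and a job scheduler instead.

```python
from concurrent.futures import ThreadPoolExecutor

TOTAL_NODES = 12                 # from the article
WORKER_NODES = TOTAL_NODES - 1   # the head node hands work to the other 11


def split_job(data, num_pieces):
    """Head node's role: cut the job into roughly equal pieces."""
    size, extra = divmod(len(data), num_pieces)
    pieces, start = [], 0
    for i in range(num_pieces):
        end = start + size + (1 if i < extra else 0)
        pieces.append(data[start:end])
        start = end
    return pieces


def node_work(piece):
    """Hypothetical per-node task: sum the squares of one piece."""
    return sum(x * x for x in piece)


def run_job(data, num_workers=WORKER_NODES):
    """Scatter pieces to the workers, then gather and combine the results."""
    pieces = split_job(data, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_results = list(pool.map(node_work, pieces))
    return sum(partial_results)


def all_to_all_pairs(num_nodes=TOTAL_NODES):
    """Number of distinct node pairs that can talk in all-to-all communication."""
    return num_nodes * (num_nodes - 1) // 2
```

Calling `run_job(list(range(100)))` gives the same answer as computing the sum of squares in one go, and `all_to_all_pairs()` shows why the cabling matters: 12 nodes means 66 distinct pairs that may need to exchange data.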

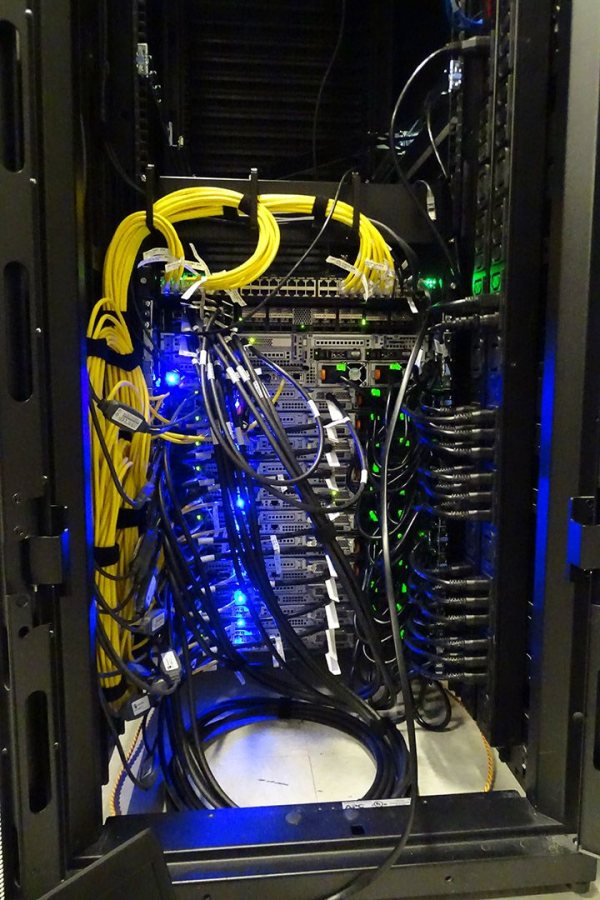

The speed of this conversation depends on a dazzling array of pricey cables that connect the head node to the other nodes and the nodes to one another. So while you can use $2 HDMI cables for your home theater, in this case, “cables do matter,” says Bauer. Collectively called “fabric,” all this high-speed networking allows data to whip around at 100 gigabits per second, the gold standard of high-speed computing.

High-speed (100 gigabits per second) cables and networking, known as “fabric,” connect the 12 nodes of the HPCC.

The Bates fabric is distinctive, Bauer says. “We went with new technology,” known as Intel Omni-Path, “that is more cost-effective and twice as fast” as the typical fabric used in an HPCC.

In terms of computing specs, each of the 12 nodes in the Bates HPCC has 28 cores, the processing units that do the work, for a total of 336 cores. A team from Dell helped Bates design the setup, which has 1.5 terabytes of RAM and 48 terabytes of disk space.
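For readers who like to check the math, here is the arithmetic behind those specs as a short Python sketch. The per-node and per-core memory figures are derived, not reported: they assume the 1.5 terabytes of RAM are spread evenly across the 12 nodes and that one terabyte is 1,024 gigabytes, neither of which the article's sources state.

```python
NODES = 12            # from the article
CORES_PER_NODE = 28   # from the article
TOTAL_RAM_TB = 1.5    # from the article

total_cores = NODES * CORES_PER_NODE

# Assumptions: RAM is split evenly across nodes, and 1 TB = 1,024 GB.
total_ram_gb = TOTAL_RAM_TB * 1024
ram_per_node_gb = total_ram_gb / NODES
ram_per_core_gb = total_ram_gb / total_cores

print(f"Total cores:  {total_cores}")
print(f"RAM per node: {ram_per_node_gb:.0f} GB")
print(f"RAM per core: {ram_per_core_gb:.1f} GB")
```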

By historical comparison, the first academic computer that Bates purchased, in 1979, was a Prime 550 with roughly three-quarters of a megabyte of RAM and 300 megabytes of storage space.

“It was a leader of its day,” says Bauer, and it could process 700,000 “instructions” per second. “The HPCC runs at just under six billion instructions per second.”
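To put the 1979 machine and the HPCC side by side, the ratios can be worked out from the figures quoted above. This sketch uses decimal units (one megabyte as a million bytes) and treats “just under six billion” as six billion, so the speed ratio is a slight overestimate; the variable names are purely illustrative.

```python
# Prime 550 (1979), figures from the article
PRIME_RAM_BYTES = 750_000          # roughly three-quarters of a megabyte
PRIME_DISK_BYTES = 300_000_000     # 300 megabytes
PRIME_INSTR_PER_SEC = 700_000

# Bates HPCC, figures from the article
HPCC_RAM_BYTES = 1_500_000_000_000     # 1.5 terabytes
HPCC_DISK_BYTES = 48_000_000_000_000   # 48 terabytes
HPCC_INSTR_PER_SEC = 6_000_000_000     # upper bound for "just under six billion"

ram_ratio = HPCC_RAM_BYTES / PRIME_RAM_BYTES
disk_ratio = HPCC_DISK_BYTES / PRIME_DISK_BYTES
speed_ratio = HPCC_INSTR_PER_SEC / PRIME_INSTR_PER_SEC

print(f"RAM:     about {ram_ratio:,.0f} times more")
print(f"Storage: about {disk_ratio:,.0f} times more")
print(f"Speed:   up to about {speed_ratio:,.0f} times faster")
```

By this reckoning the memory has grown far more than the quoted instruction rate has.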

That’s impressive, but computing power is relative, of course. To meet computing needs that are even bigger, Oishi uses NASA’s Pleiades Supercomputer, which has nearly 200,000 cores compared to Bates’ 336. “But otherwise, it’s built on nodes almost identical to ours,” he says.

As he meets the Bates HPCC for the first time, Oishi says he’s “impressed by how small the cluster is” compared with some that he’s used in the past.

Since computers today use so much less power than their forebears, they give off less heat, which means the components of an HPCC can be packed close together — notwithstanding the air-conditioning that blasts away from one corner of the room.

Had Oishi arrived at Bates as a solo computational physicist, the college might have created a one-off HPC setup for him. But Oishi — who is the co-principal investigator of a NASA grant that will send $114,000 to Bates to support his research on stellar magnetism — was joined this fall by another new assistant professor of physics, Aleks Diamond-Stanic.

Himself the holder of a $108,000 grant from the Space Telescope Science Institute to study the stellar mass of starbursts, Diamond-Stanic uses big data from the Hubble Space Telescope and the Sloan Digital Sky Survey to study the evolution of galaxies and supermassive black holes. So he, too, had big-time computing needs.

The pair’s arrival — and the anticipation that more and more faculty will need powerful computing resources — created a critical mass for the HPCC project, says White. “When Jeff talked to us about his needs, and Aleks talked about his needs, we saw a way to support them while serving the larger Bates community, too.”

Assistant Professor of Physics Aleks Diamond-Stanic will use the HPCC to crunch big data from the Hubble Space Telescope and the Sloan Digital Sky Survey. (Josh Kuckens/Bates College)

In fact, White, Bauer, and others had already been testing some ideas for HPC at Bates. “Thanks to Aleks and Jeff, we went from zero to 60 just like that,” White says.

The Bates HPCC setup is known as the “condo” model: The infrastructure belongs to the college, and professors sort of “buy into it,” Oishi says. That is, he and Diamond-Stanic have contributed some of their startup funds (research dollars that the college provides to new faculty) to purchase new nodes. In return, they’ll have priority access.
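One way to picture “priority access” is as a queue in which contributors’ jobs jump ahead of everyone else’s. The sketch below is purely illustrative: the article does not describe how Bates schedules jobs, and the class, method, and user names are all hypothetical.

```python
import heapq
import itertools


class CondoQueue:
    """Toy job queue: jobs from contributing faculty run before other jobs."""

    def __init__(self, contributors):
        self.contributors = set(contributors)
        self._heap = []
        self._order = itertools.count()  # tie-breaker keeps first-come order

    def submit(self, user, job_name):
        # Lower number means higher priority: 0 for contributors, 1 otherwise.
        priority = 0 if user in self.contributors else 1
        heapq.heappush(self._heap, (priority, next(self._order), user, job_name))

    def next_job(self):
        """Return the (user, job_name) pair that should run next."""
        _, _, user, job_name = heapq.heappop(self._heap)
        return user, job_name

    def __len__(self):
        return len(self._heap)
```

In this toy version, a job submitted by a contributor runs before an earlier job from someone who has not bought in, while jobs at the same priority level keep their first-come, first-served order.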

“I think that’s a really great model because it’s expandable and it’s flexible,” says Oishi. Indeed, the fact that the HPCC rack is currently only half-full anticipates that more faculty will buy into the “condo,” especially professors in the college’s new Digital and Computational Studies Program, slated to debut as a major in fall 2018.

In that sense, the Bates HPCC will “support our current faculty and help us attract new colleagues,” says White.

Thirty years ago, if you wanted access to powerful computing, you tapped into a shared resource. By the 1990s, when Oishi was at the American Museum of Natural History, things had changed.

At the museum, he worked with a computational biologist, Ward Wheeler, who was custom-building his own HPCCs to research the evolution of tree DNA over the past 500 million years. Wheeler’s budget wasn’t huge, Oishi recalls, “but he realized that he could order parts,” such as processors and cases, “from a commodity source, get a team of people in his office with screwdrivers, and put them all together over a weekend.”

Fast forward a decade, to when Oishi was a postdoc at Berkeley, and every researcher with any kind of budget was building their own HPCC. “It fell to the faculty member or a grad student to maintain them because the IT department would say, ‘Do whatever you want but we’re not touching that. You brought it in, you built it, you put it in your closet.’ And we were running out of closet space.”

That created waste and redundancy, so by around 2010 colleges and universities began to move back to offering shared high-performance computing resources. “They stepped in and said, ‘Let us do this for you,’” Oishi says.